Semantic Segmentation for Autonomous Vehicles

Implementing multiple segmentation architectures and comparing the results

Project Description:

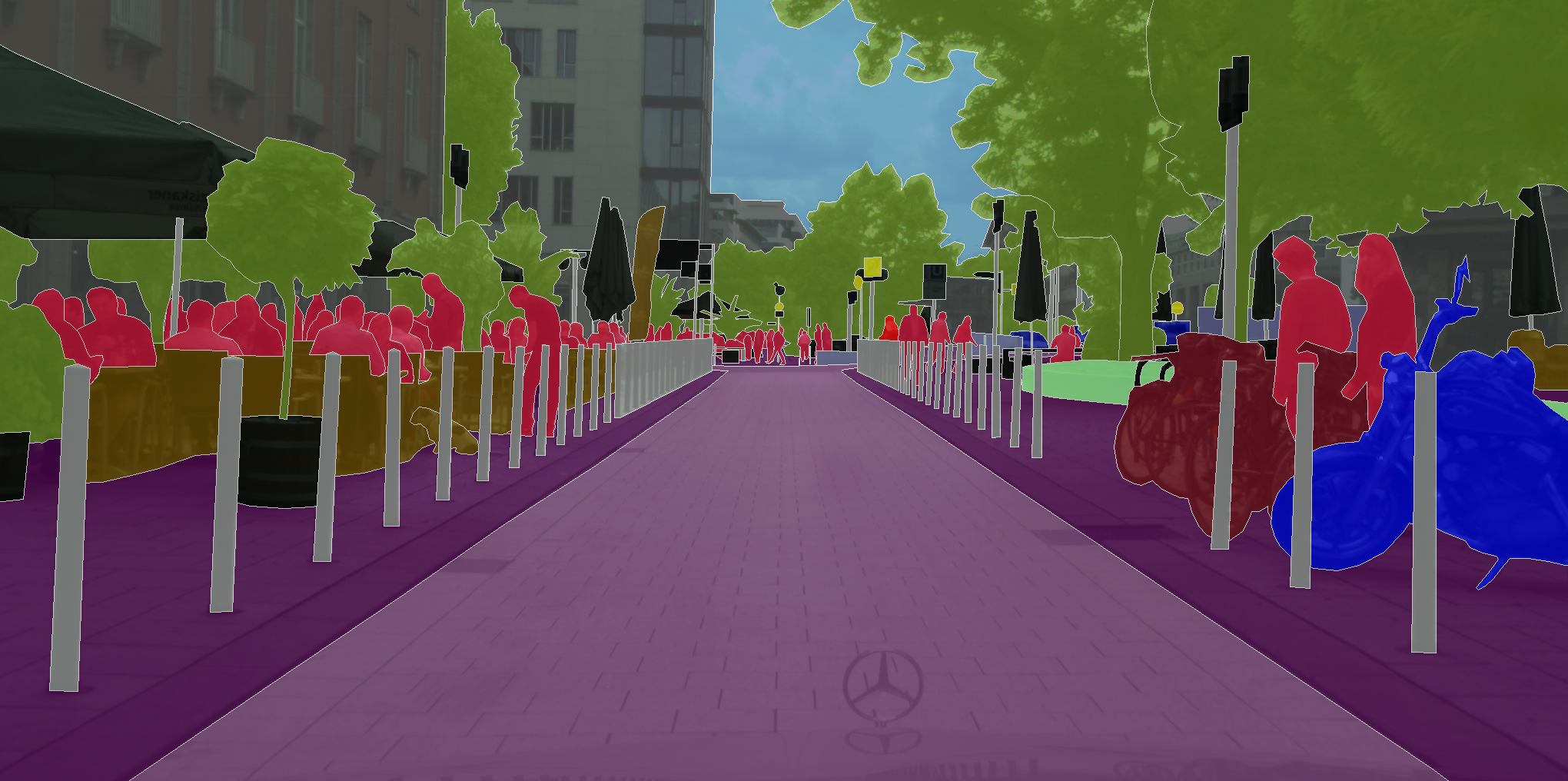

Autonomous vehicles are a large industry, with the international market estimated at USD 121.78 billion in 2022. Scene understanding is critical to the safety and consistency of autonomous driving, and semantic segmentation is one technique used to achieve it. Cameras record roads, pedestrians, buildings, and other important objects, and every pixel in each image is assigned to an object class.

In practice, this is a deep learning problem: classical, non-deep-learning methods have not matched deep learning on this task. However, even with deep learning, data becomes an issue, because a lack of good data often keeps models from performing well. Thus, the purpose of this project is to determine which segmentation models perform best given the same training and test data.

Method:

After a significant literature review, a few models stood out: FCN, U-Net, U-Net++, DeepLab, GCN, and SegNet. All of these models are built from convolutional layers, which extract features from an image by applying many learned filters.
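To make the idea of a filter concrete, here is a minimal NumPy sketch of a single 2D filter sliding over a toy image. It is an illustration only, not code from this project: the helper `conv2d_valid` and the toy image are hypothetical, and the Sobel kernel is hand-written, whereas a convolutional network learns its filter weights during training.

```python
import numpy as np

def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Slide `kernel` over `image` (no padding, stride 1).

    Like the "convolution" in most deep learning libraries, this is
    technically cross-correlation: the kernel is not flipped.
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w), dtype=np.float64)
    for i in range(out_h):
        for j in range(out_w):
            # Weighted sum of the patch under the kernel.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Toy 5x5 image: dark on the left, bright on the right (a vertical edge).
image = np.array([[0, 0, 0, 1, 1]] * 5, dtype=np.float64)

# Hand-written vertical-edge filter (Sobel), used here only for illustration.
sobel_x = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=np.float64)

response = conv2d_valid(image, sobel_x)
print(response)  # zero where the patch is flat, positive where it covers the edge
```

Each layer in a segmentation network applies many such filters in parallel and stacks the resulting response maps as channels for the next layer.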

The chosen dataset was a subset of the Cityscapes dataset: 2,600 images were used for training, 300 for validation, and 500 for testing after training.
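How the subset was drawn is not described here, so the following is only a sketch of one common approach: shuffle the image indices with a fixed seed and cut them into three non-overlapping groups of the sizes above. The function `split_indices`, the seed, and the assumption that the pool holds exactly 3,400 images (the sum of the three sizes) are all hypothetical.

```python
import numpy as np

# Split sizes taken from the text above.
N_TRAIN, N_VAL, N_TEST = 2600, 300, 500

def split_indices(n_total: int, n_train: int, n_val: int, n_test: int, seed: int = 0):
    """Return three disjoint index arrays for training, validation, and testing."""
    if n_train + n_val + n_test > n_total:
        raise ValueError("requested split sizes exceed the number of images")
    rng = np.random.default_rng(seed)      # fixed seed keeps the split reproducible
    order = rng.permutation(n_total)       # shuffled indices 0..n_total-1
    train = order[:n_train]
    val = order[n_train:n_train + n_val]
    test = order[n_train + n_val:n_train + n_val + n_test]
    return train, val, test

# Assumption: the image pool contains exactly the 3,400 images that are used.
train_idx, val_idx, test_idx = split_indices(
    N_TRAIN + N_VAL + N_TEST, N_TRAIN, N_VAL, N_TEST
)
print(len(train_idx), len(val_idx), len(test_idx))
```

Keeping the three groups disjoint matters for the comparison: every model must be evaluated on test images that none of them saw during training.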

U-Net, U-Net++, and SegNet were tested to see which would perform best. To bolster the amount of training data, standard data augmentation techniques were applied, including mirroring, darkening/brightening, and blurring images.
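The three augmentations can be sketched in plain NumPy as follows. This is not the project's actual pipeline: the function names, the brightness factors, and the 3x3 box blur are assumptions chosen for illustration, and images are assumed to be float arrays of shape `(H, W, 3)` with values in `[0, 1]`. One detail specific to segmentation is that a geometric change such as mirroring must also be applied to the label mask, while brightness and blur only touch the image.

```python
import numpy as np

def mirror(image: np.ndarray, mask: np.ndarray):
    """Flip image and label mask left-to-right so pixels and labels stay aligned."""
    return image[:, ::-1], mask[:, ::-1]

def adjust_brightness(image: np.ndarray, factor: float) -> np.ndarray:
    """Scale pixel values (factor > 1 brightens, factor < 1 darkens), clipped to [0, 1]."""
    return np.clip(image * factor, 0.0, 1.0)

def box_blur(image: np.ndarray, k: int = 3) -> np.ndarray:
    """Blur with a k x k mean filter (k odd); borders are handled by edge replication."""
    pad = k // 2
    padded = np.pad(image, ((pad, pad), (pad, pad), (0, 0)), mode="edge")
    out = np.zeros_like(image)
    h, w = image.shape[:2]
    for dy in range(k):
        for dx in range(k):
            # Accumulate each shifted copy of the image, then average.
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)

# Toy data standing in for a real image and its label mask.
rng = np.random.default_rng(0)
image = rng.random((4, 6, 3))            # float RGB image in [0, 1)
mask = rng.integers(0, 3, size=(4, 6))   # one class id per pixel

mirrored_image, mirrored_mask = mirror(image, mask)
brighter = adjust_brightness(image, 1.3)
darker = adjust_brightness(image, 0.7)
blurred = box_blur(image)
```

The mask is left untouched by `adjust_brightness` and `box_blur` because those operations change how a pixel looks, not which object it belongs to.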

After a few hours of training, TensorFlow reported the following training and validation losses.

Pixel accuracy was the chosen metric for comparison, and the following pixel accuracies were calculated:
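For reference, pixel accuracy is the number of correctly classified pixels divided by the total number of pixels. The sketch below shows one way to compute it with NumPy; the function name `pixel_accuracy` and the optional `ignore_label` argument (for pixels without a valid label) are assumptions for illustration, not the project's evaluation code.

```python
import numpy as np

def pixel_accuracy(pred: np.ndarray, target: np.ndarray, ignore_label=None) -> float:
    """Fraction of pixels whose predicted class id equals the ground-truth class id."""
    if pred.shape != target.shape:
        raise ValueError("prediction and target must have the same shape")
    if ignore_label is None:
        valid = np.ones(target.shape, dtype=bool)
    else:
        # Skip pixels that carry the "ignore" label in the ground truth.
        valid = target != ignore_label
    n_valid = int(valid.sum())
    if n_valid == 0:
        raise ValueError("no valid pixels to evaluate")
    return float((pred[valid] == target[valid]).sum() / n_valid)

# Toy 2x3 label maps with three classes (0, 1, 2).
target = np.array([[0, 0, 1],
                   [1, 2, 2]])
pred = np.array([[0, 1, 1],
                 [1, 2, 0]])

acc = pixel_accuracy(pred, target)  # 4 of the 6 pixels match
print(f"pixel accuracy: {acc:.3f}")
```

Because every pixel counts equally, this metric is dominated by large classes such as road and sky, which is worth keeping in mind when comparing models on it.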

U-Net++ ended up with the best results, followed by U-Net and SegNet. However, U-Net++ also had the most trainable parameters and took the longest to train, so there is a tradeoff between accuracy and cost. Because training only happens once and memory is rarely a limiting factor nowadays, U-Net++ is the segmentation model to use!